Artificial Intelligence (AI) methods have been explored and used for a long time, but the last decade has seen a surge of highly successful and far-reaching applications, driven by rapid advances in deep convolutional neural networks, the availability of large, high-quality data sources, easy access to high-performance computing via the cloud, and new demands from an increasingly digital society.

It is well known that AI (including machine learning (ML), and deep learning in particular) is highly capable of processing datasets that exhibit strong spatial, temporal, visual, audio, linguistic or mathematical patterns in many of our natural and socioeconomic systems, and of revealing new insights from them. In the geospatial field, GIS and remote sensing packages (e.g. ArcGIS, SuperMap, ENVI and ERDAS IMAGINE) are each equipped with some popular AI and deep learning methods.

Implementing a full AI application project at scale, from ingesting very large datasets, through applying adapted deep learning methods, to achieving consistent, objective results, is no small task. We routinely analyse satellite and aerial imagery to extract image features and create thematic layers (e.g. land cover, buildings, trees and vegetation; link 1, link 2). In this blog, we report on our latest processing service, which uses new AI and deep learning techniques to extract features of the built environment (specifically, buildings) from high-resolution imagery over very large geographic regions.

1. Processing Workflow

Input imagery and other geospatial data: We work with various inputs and data sources:

- Imagery (single band, 3-band RGB, 4-band RGB & NIR, multispectral, hyperspectral and SAR)
- LiDAR point clouds, DEMs and other geospatial layers
- Imagery directly licensed from about 10 international vendors (who can supply sub-meter resolution satellite imagery) and domestic vendors (who can supply very high-resolution aerial and drone imagery). Comprehensive imagery comparisons and evaluation can be performed if required.
- Open imagery (archival or fresh) from local, state and national government agencies.

Methods: Standard AI and deep learning methods usually need to be customised, adapted and enhanced to suit the task at hand and our understanding of the features to be classified, in order to achieve greater effectiveness and efficiency. There is no single generic model that suits all tasks; often we have to develop a large number of customised models to suit various inputs.

High-performance computing: This used to be a barrier for many but is now readily accessible via cloud computing. We make extensive use of cloud services from GCP, AWS and Azure.

Geospatial pre- and post-processing: We have developed an integrated set of tools that automates the processing of geospatial inputs and outputs for AI and deep learning models. Typically, our inputs are in GeoTIFF format (for raster data) and our outputs are in Shapefile format (for vector data).
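
As an illustration of one such pre-processing step, the sketch below splits a large raster into overlapping, fixed-size tiles so that each chip matches a model's input size and predictions can be blended along the seams afterwards. It only computes pixel windows; reading the GeoTIFF itself (e.g. with a library such as rasterio) is left out. The tile size of 512 pixels, the 64-pixel overlap and the names `Window` and `tile_windows` are illustrative assumptions, not our production settings.

```python
from __future__ import annotations

from typing import Iterator, NamedTuple


class Window(NamedTuple):
    """A rectangular pixel window: row/column offsets plus size."""
    row_off: int
    col_off: int
    height: int
    width: int


def _starts(total: int, tile: int, stride: int) -> list[int]:
    """Start offsets along one axis so that the tiles cover the full extent."""
    if total <= tile:
        return [0]
    starts = list(range(0, total - tile + 1, stride))
    if starts[-1] + tile < total:
        # Shift a final tile inwards so it ends exactly at the raster edge.
        starts.append(total - tile)
    return starts


def tile_windows(raster_height: int, raster_width: int,
                 tile: int = 512, overlap: int = 64) -> Iterator[Window]:
    """Yield overlapping windows that together cover the whole raster."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must satisfy 0 <= overlap < tile")
    stride = tile - overlap
    for r in _starts(raster_height, tile, stride):
        for c in _starts(raster_width, tile, stride):
            yield Window(r, c,
                         min(tile, raster_height - r),
                         min(tile, raster_width - c))
```

For example, `tile_windows(1200, 1000)` yields nine 512 x 512 windows; the last row and column of tiles are shifted inwards so they end exactly at the raster edge instead of running past it.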

Quality control: Model predictions may already be highly accurate, but for certain tasks we still apply various post-processing steps and manual interpretation to refine the initial predictions and ensure that the delivered outputs achieve an extremely high accuracy.

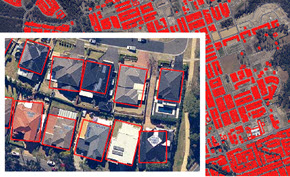

Figure 1: An AI-based image analysis turning RGB input imagery into a predicted probability map which suggests where building roofs are likely located. Red colours represent probabilities close to one. From the prediction map, generalised building outlines can be derived.

Figure 2: Example of extracted building outlines (in the form of minimum bounding box) for an area in Sydney, Australia.
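
The step from a probability map (Figure 1) to building boxes (Figure 2) can be sketched as thresholding followed by connected-component labelling. This minimal version assumes a NumPy array of per-pixel roof probabilities, a 0.5 threshold and a 4-pixel minimum blob size (all hypothetical choices), and returns axis-aligned boxes in pixel coordinates. A true minimum bounding box may be rotated, and converting pixel coordinates to map coordinates with the raster's geotransform is not shown.

```python
from __future__ import annotations

from collections import deque

import numpy as np


def building_boxes(prob: np.ndarray, threshold: float = 0.5,
                   min_pixels: int = 4) -> list[tuple[int, int, int, int, float]]:
    """Threshold a roof-probability map and return, for each 4-connected blob,
    (row_min, col_min, row_max, col_max, mean_probability) in pixel units."""
    mask = prob >= threshold
    seen = np.zeros(mask.shape, dtype=bool)
    n_rows, n_cols = mask.shape
    boxes = []
    for r0 in range(n_rows):
        for c0 in range(n_cols):
            if not mask[r0, c0] or seen[r0, c0]:
                continue
            # Breadth-first flood fill collects every pixel of this blob.
            queue = deque([(r0, c0)])
            seen[r0, c0] = True
            pixels = []
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < n_rows and 0 <= nc < n_cols
                            and mask[nr, nc] and not seen[nr, nc]):
                        seen[nr, nc] = True
                        queue.append((nr, nc))
            if len(pixels) < min_pixels:
                continue  # discard speckle too small to be a building
            rows, cols = zip(*pixels)
            mean_prob = float(prob[list(rows), list(cols)].mean())
            boxes.append((min(rows), min(cols), max(rows), max(cols), mean_prob))
    return boxes
```

The mean probability of each blob is kept because it is a convenient handle for quality control, e.g. routing low-confidence buildings to manual interpretation. In practice a library routine such as `scipy.ndimage.label` would normally replace the hand-written flood fill.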

2. Some Observations and Critical Views

Software vendors may present promotional views or demos showing how simple, easy or straightforward an AI/ML workflow is, but the reality is far more complex. Here we share some observations and critical views on this type of image analysis:

- Understanding the features on the ground is paramount. In some localities no two buildings are alike, and building characteristics can be extremely heterogeneous. Anyone who manually digitises buildings over imagery will quickly realise this. A separate house may have various attached or detached parts, an outbuilding, a shed or a granny flat. As a result, the building outlines or footprints used as ground truth have to be generalised when they are digitised.

- Understanding how features are represented in imagery is also critical. Although many regard deep learning as a purely data-driven technique, domain knowledge from subject experts can be instrumental, especially in preparing inputs, adapting models and interpreting results.

- When preparing training and validation datasets, a large number of samples is needed to represent all features of interest adequately. If the study area is small and all features are very homogeneous, however, the requirements on the size of the training and validation samples can be far less strict.

- Many model parameters, ranging from data augmentation settings to those that reduce spatial uncertainty in predictions, have to be tuned or controlled. Together with the usually large datasets and the substantial computing power required, this can make the whole analysis complex and resource-intensive.

- This type of image analysis is sensitive to almost every aspect of the input. If the input imagery is not normalised, prediction results are unlikely to be consistent or robust, even after applying many dozens of pre-trained models. This has implications for implementing related autonomous analysis and decision systems. Over the past two decades there have been various bold proposals, mainly from imagery vendors, to extract a full set of building characteristics from high-resolution imagery using automated workflows, but so far few have been put into practice. On rapid damage assessment after disasters using high-resolution imagery and AI-based analysis, this article from the WSJ provides a realistic assessment and serves as a warning (link).

- Accuracy assessment depends on a whole range of factors, e.g. the size of the validation and testing samples, the quality of the ground truth data, the number of features classified, and the reporting metrics used. Even when an automated workflow achieves a very high overall accuracy, it is still important for developers to report such metrics to end users, as all the referenced papers in this major application field do. Otherwise, some may wrongly assume that accuracy is perfect, without any caveats or questions, and this implicit assumption could make or break an intended application. Extensive post-processing is usually needed to make sure all predicted features are verifiable (i.e. “seeing is believing”). As a case in point, some buildings may be partially or completely obscured by trees, and it is impossible to achieve perfect accuracy without manual interpretation.
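
To make the data augmentation point concrete: because buildings seen from above can appear at any orientation, a common and inexpensive augmentation is to use the eight flip/rotation variants of each training chip. The sketch assumes channel-last image arrays of shape `(H, W, bands)` with a matching `(H, W)` label mask; the key detail is that both receive the same transform so the labels stay aligned. The function name is hypothetical, and this is one standard choice rather than the specific augmentation we use.

```python
import numpy as np


def dihedral_augment(image: np.ndarray, mask: np.ndarray, k: int):
    """Return the k-th (0..7) flip/rotation variant of an image chip of shape
    (H, W, bands) and of its (H, W) label mask, transformed identically."""
    if not 0 <= k < 8:
        raise ValueError("k must be in the range 0..7")
    if k >= 4:
        # Variants 4..7 start with a left-right flip.
        image, mask = np.flip(image, axis=1), np.flip(mask, axis=1)
    # Rotate by (k % 4) * 90 degrees in the spatial plane only.
    image = np.rot90(image, k % 4, axes=(0, 1))
    mask = np.rot90(mask, k % 4, axes=(0, 1))
    return image, mask
```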
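
On the normalisation point, one common way to reduce sensitivity to radiometric differences between scenes, dates or vendors is a per-band percentile stretch applied before inference. The 2nd/98th percentile cut-offs below are an assumption; z-score standardisation or histogram matching to a reference scene are alternatives, and we do not claim this is the normalisation used in our pipeline.

```python
import numpy as np


def percentile_normalise(image: np.ndarray, low: float = 2.0,
                         high: float = 98.0) -> np.ndarray:
    """Rescale each band of an (H, W, bands) image to [0, 1] using that band's
    own low/high percentiles; values beyond them are clipped."""
    image = image.astype(np.float64)
    out = np.empty_like(image)
    for b in range(image.shape[-1]):
        band = image[..., b]
        lo, hi = np.percentile(band, [low, high])
        scale = hi - lo if hi > lo else 1.0  # guard against flat (constant) bands
        out[..., b] = np.clip((band - lo) / scale, 0.0, 1.0)
    return out
```

Whatever scheme is chosen, the same normalisation has to be applied at training and at inference time; otherwise the model receives inputs from a different distribution than the one it was trained on.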
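
As for reporting accuracy, the sketch below computes common pixel-level metrics for a predicted building mask against ground truth. It is only one option: object-level metrics (matching predicted and reference outlines, for example at an IoU threshold) are also widely used, and we make no claim about which metrics the referenced papers report. Overall accuracy also counts background pixels correctly left as non-building, so it can stay high even when many buildings are missed.

```python
from __future__ import annotations

import numpy as np


def mask_metrics(pred: np.ndarray, truth: np.ndarray) -> dict[str, float]:
    """Pixel-level accuracy metrics for binary building masks (1 = building).
    Ratios with an empty denominator are reported as 0.0 by convention here."""
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    tp = int(np.sum(pred & truth))     # building predicted where there is one
    fp = int(np.sum(pred & ~truth))    # building predicted where there is none
    fn = int(np.sum(~pred & truth))    # building missed, e.g. hidden under trees
    tn = int(np.sum(~pred & ~truth))   # background correctly left empty

    def ratio(num: int, den: int) -> float:
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)
    return {
        "overall_accuracy": ratio(tp + tn, tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall) if precision + recall else 0.0,
        "iou": ratio(tp, tp + fp + fn),
    }
```

For instance, on a 10 x 10 tile containing a single building pixel, a prediction that marks no buildings at all still scores an overall accuracy of 0.99, while its recall is 0.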

3. Creating Innovative Applications

New advances in AI and deep learning offer opportunities for innovative applications. More and more companies (e.g. Google, Nearmap and PSMA Australia) are investing in creating thematic data from satellite and aerial imagery. Yet nobody is interested in hype about new technologies for its own sake.

Our focus has been on creating a few concrete projects where AI and deep learning can bring real value and tangible benefits. We plan to widen and deepen AI-based analysis and applications, especially for property location metrics and risk modelling, including:

- Keep extracting features more accurately and cost-effectively from imagery and other geospatial data, and creating new data content and property location metrics.

- Create integrated geospatial models. We often combine extracted building shapes with LiDAR point clouds to develop 3D models, and many examples are available on request. The thematic data extracted from imagery is, to some extent, similar to GPS data: it is most valuable when enriched with many other geospatial data for integrated applications or solutions (e.g. see our Location Profile Report project, link).

- Quantify land cover and built environment changes, such as urban growth, and changes before and after major events.

- Better explore hazard detection and modelling (e.g. bushfire hotspot detection and flood hazard modelling) using the spatial and temporal patterns that new AI and deep learning techniques may reveal.

- Geocode exposures at the building level. Our next blog will cover a large-scale project that focuses on building-level geocoding of G-NAF (a national address database in Australia) and on using more accurate exposure data for improved risk analysis.
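
Building-level geocoding essentially means assigning each address point to the building footprint that contains it. A minimal point-in-polygon sketch (ray casting) follows. It assumes address points and footprint rings share the same projected coordinate system; the IDs and data structures are hypothetical and do not reflect the G-NAF schema. At national scale, a spatial index or a spatial join in a library such as GeoPandas would replace this brute-force loop.

```python
from __future__ import annotations


def point_in_polygon(x: float, y: float, ring: list[tuple[float, float]]) -> bool:
    """Ray-casting test: count how many polygon edges a ray cast from (x, y)
    towards +x crosses; an odd count means the point is inside."""
    inside = False
    n = len(ring)
    for i in range(n):
        x1, y1 = ring[i]
        x2, y2 = ring[(i + 1) % n]  # wrap around to close the ring
        if (y1 > y) != (y2 > y):  # edge straddles the ray's horizontal line
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def match_addresses(addresses: dict[str, tuple[float, float]],
                    footprints: dict[str, list[tuple[float, float]]]
                    ) -> dict[str, str | None]:
    """Assign each address point to the first footprint that contains it,
    or None if it falls outside every footprint."""
    return {
        addr_id: next((fid for fid, ring in footprints.items()
                       if point_in_polygon(x, y, ring)), None)
        for addr_id, (x, y) in addresses.items()
    }
```

Points that fall outside every footprint come back as `None`, flagging them for follow-up rather than silently attaching them to the wrong building.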
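
Combining extracted building shapes with LiDAR, as mentioned above, can start with something as simple as estimating a height for each building. This sketch assumes the points carry the ASPRS LAS classification code (6 = Building), that the outline is the axis-aligned bounding box of an extracted building, and that the ground elevation comes from a DEM. The function name is hypothetical, and taking the median of the building returns is just one robust choice.

```python
from __future__ import annotations

import statistics

BUILDING_CLASS = 6  # ASPRS LAS classification code for "Building"


def building_height(points: list[tuple[float, float, float, int]],
                    bbox: tuple[float, float, float, float],
                    ground_z: float) -> float | None:
    """Estimate a building's height above ground from classified LiDAR points.

    points   -- (x, y, z, classification) tuples in the same CRS as bbox
    bbox     -- (xmin, ymin, xmax, ymax) of the extracted building outline
    ground_z -- terrain elevation at the building, e.g. sampled from a DEM
    """
    xmin, ymin, xmax, ymax = bbox
    roof_z = [z for x, y, z, cls in points
              if cls == BUILDING_CLASS and xmin <= x <= xmax and ymin <= y <= ymax]
    if not roof_z:
        return None  # no building returns here: flag rather than guess
    # The median of the roof returns is robust to a few stray points.
    return statistics.median(roof_z) - ground_z
```

Because a bounding box is used rather than the true outline, its corners may pick up returns from a neighbouring structure; testing points against the actual footprint polygon would tighten this.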